Imagine diving into the vast ocean of human language, where every ripple of meaning holds potential insights waiting to be discovered. At the heart of this exploration lies tokenization in NLP (Natural Language Processing), a foundational process that allows machines to understand and interact with human language. Tokenization serves as the first step in analyzing text, breaking it down into manageable pieces, called tokens, that can be further processed and understood. In this article, we will navigate the depths of tokenization, examining its meaning, types, techniques, challenges, and applications, while also reflecting on how it shapes the future of NLP.

Introduction to Tokenization in NLP

But First, The Definition of Tokenization

To start, let’s clarify what tokenization is in NLP. In essence, tokenization is the process of splitting text into smaller units called tokens. These tokens can be words, subwords, or even characters, depending on the application and the language being processed. Think of tokenization as slicing a loaf of bread into individual pieces, where each slice represents a meaningful unit of the larger whole. This initial segmentation is crucial because it allows subsequent NLP tasks like translation, classification, or sentiment analysis to function effectively.

Tokenization acts as the gateway to understanding language data. By transforming a block of text into tokens, we enable machines to recognize patterns, analyze structures, and derive meanings from language, which ultimately enhances their ability to interact with us in a meaningful way.

Importance of Tokenization in Natural Language Processing (NLP)

Tokenization plays a critical role in enabling machines to interpret and process natural language. Proper tokenization can significantly improve the performance of NLP models in various tasks. For instance, consider a sentiment analysis model; if the text isn’t properly tokenized, the model might struggle to understand the nuances of language, such as idiomatic expressions or compound words.

Effective tokenization enhances the model’s ability to accurately classify the sentiment of a text. Similarly, in translation tasks, the precision of tokenization can determine the accuracy of the translated output. As we delve deeper into the world of NLP, it becomes clear that tokenization in NLP is not merely a preliminary step; it is a foundational process that directly impacts the success of various applications.

Types of Tokenization

| Tokenization Method | Description | Pros | Cons | Use Cases |
| --- | --- | --- | --- | --- |
| Word Tokenization | Splits text into individual words based on whitespace and punctuation. | Simple and intuitive; effective for languages with clear word boundaries. | Struggles with out-of-vocabulary (OOV) words; may not handle compound words well. | Text classification, topic modeling |
| Character Tokenization | Treats each character as a token, providing a fine-grained representation. | Avoids OOV issues; useful for languages without clear word boundaries. | Generates long sequences, which can be challenging for models to learn from. | Handwriting recognition, specific language tasks |
| Subword Tokenization | Breaks words into smaller units (often, but not always, morpheme-like pieces), balancing efficiency and semantic integrity. | Handles rare words effectively; reduces vocabulary size while maintaining coverage. | May still struggle with very complex morphological structures. | Machine translation, NLP tasks with rich morphology |
| Byte Pair Encoding (BPE) | Starts from characters and repeatedly merges the most frequent adjacent pair of symbols into a new token until a target vocabulary size is reached. | Efficient handling of unseen words, which can be composed from learned pieces. | The number of merge operations (and thus the vocabulary size) must be tuned. | Neural machine translation, large NLP models |
| WordPiece | Similar to BPE, but chooses merges by how much they improve the likelihood of the training data; when tokenizing, it greedily matches the longest vocabulary piece first and marks word-internal pieces with a prefix such as “##”. | Enhances model understanding of word structure; balances word and subword representations. | Can lead to increased complexity in token management. | Google’s neural machine translation, BERT models |
| Unigram | A probabilistic model that selects the most likely tokenization under token probabilities estimated from training data. | Balances vocabulary size and tokenization quality; adaptable to various applications. | May require extensive training data to function optimally. | General NLP tasks, sentiment analysis |
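To make the BPE row concrete, here is a minimal sketch of the merge-learning loop in Python. The toy word counts and the function names (`learn_bpe`, `merge_pair`, `apply_bpe`) are our own illustrative assumptions; production tokenizers add details such as end-of-word markers and byte-level fallback that are left out here.

```python
from collections import Counter

def merge_pair(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` in `symbols` with the fused symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)

def learn_bpe(word_counts: dict[str, int], num_merges: int) -> list[tuple[str, str]]:
    """Learn merge rules by repeatedly fusing the most frequent adjacent symbol pair."""
    # Each word starts as a tuple of single characters.
    corpus = {tuple(word): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, count in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += count
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        corpus = {merge_pair(symbols, best): count for symbols, count in corpus.items()}
    return merges

def apply_bpe(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Tokenize a word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)

# Hypothetical toy corpus: word -> frequency.
merges = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, num_merges=4)
print(apply_bpe("lowest", merges))  # ['low', 'est']
```

The word “lowest” never appears in the toy corpus, yet it is covered by two pieces learned from other words, which is the behavior the table describes as efficient handling of unseen words.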

Word Tokenization

Word tokenization involves breaking down text into individual words or tokens. This method is one of the most common forms of tokenization and is particularly useful in applications where word-level meaning is essential. For example, in tasks like text classification or topic modeling, understanding each word’s contribution to the overall meaning is crucial. Imagine reading a book where every word plays a part in shaping the story; similarly, word tokenization allows us to extract insights from textual data.
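As a rough illustration, word tokenization and its main weakness, out-of-vocabulary words, can be sketched in a few lines of Python. The regular expression, the two-sentence corpus, and the `<unk>` convention below are assumptions chosen for the example, not a standard.

```python
import re

def word_tokenize(text: str) -> list[str]:
    """Lowercase the text and return words plus standalone punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(corpus: list[str]) -> dict[str, int]:
    """Assign an integer ID to every distinct token; ID 0 is reserved for unknowns."""
    vocab = {"<unk>": 0}
    for sentence in corpus:
        for token in word_tokenize(sentence):
            vocab.setdefault(token, len(vocab))
    return vocab

def encode(text: str, vocab: dict[str, int]) -> list[int]:
    """Map tokens to IDs, falling back to the <unk> ID for unseen tokens."""
    return [vocab.get(token, vocab["<unk>"]) for token in word_tokenize(text)]

vocab = build_vocab(["The cat sat.", "The dog barked."])
print(word_tokenize("The cat barked!"))  # ['the', 'cat', 'barked', '!']
print(encode("The cat barked!", vocab))  # [1, 2, 6, 0]
```

The exclamation mark was never seen while building the vocabulary, so it collapses to the unknown ID 0. The same thing happens to any unseen word, which is why word-level vocabularies tend to grow large.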

Subword Tokenization

In contrast, subword tokenization breaks words into smaller units, typically with algorithms such as Byte Pair Encoding (BPE). This approach is particularly effective for handling rare or complex words, enabling models to generalize better. For instance, the word “unhappiness” could be split into “un,” “happi,” and “ness,” allowing the model to reuse what it has learned about each component. Because an unseen word can be assembled from known pieces, this method addresses the challenge of out-of-vocabulary words, making it invaluable for languages with rich morphology.
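Here is a small sketch of how a fixed subword vocabulary can segment a word it has never seen as a whole. It uses greedy longest-match-first lookup in the style of WordPiece, with “##” marking word-internal pieces. The tiny vocabulary and the function name are hypothetical; real vocabularies are learned from data and contain tens of thousands of pieces.

```python
def subword_tokenize(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Segment a word by repeatedly taking the longest vocabulary piece that fits."""
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # word-internal pieces carry a prefix
            if candidate in vocab:
                match = candidate
                break
            end -= 1  # shrink the candidate and try again
        if match is None:
            return [unk]  # no piece fits here, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces

# Hypothetical toy vocabulary.
vocab = {"un", "##happi", "##ness", "token", "##ization"}
print(subword_tokenize("unhappiness", vocab))   # ['un', '##happi', '##ness']
print(subword_tokenize("tokenization", vocab))  # ['token', '##ization']
print(subword_tokenize("xyz", vocab))           # ['[UNK]']
```

A BPE tokenizer reaches a similar result by replaying learned merges rather than by dictionary lookup, but the outcome is the same in spirit: rare words become sequences of familiar pieces.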

Character Tokenization

Lastly, we have character tokenization, which splits text at the character level. This technique is especially useful for specific languages or applications where characters carry significant meaning, such as in Chinese or Japanese. Character tokenization can enhance performance in tasks like handwriting recognition or language modeling, where understanding the building blocks of words is essential.
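In Python, character tokenization needs almost no code, which makes the trade-off easy to see: there are no unknown tokens, but sequences get much longer. The example sentences below are our own.

```python
def char_tokenize(text: str) -> list[str]:
    """Treat every character, including spaces and punctuation, as a token."""
    return list(text)

sentence = "Tokenizers split text."
print(len(sentence.split()), len(char_tokenize(sentence)))  # 3 22

# Text written without spaces still yields usable tokens.
print(char_tokenize("我爱自然语言处理"))
```

Three whitespace-separated words turn into 22 character tokens, which illustrates why character-level models have to learn from much longer sequences.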

Tokenization Techniques

| Tokenization Technique | Description | Advantages | Disadvantages | Use Cases |
| --- | --- | --- | --- | --- |
| Rule-based Tokenization | Utilizes predefined rules (e.g., whitespace, punctuation) to determine token boundaries. | Simple to implement; effective for straightforward text. | Struggles with complex linguistic structures; may miss nuances in language. | Basic text processing, simple language models |
| Statistical Tokenization | Employs probabilities and patterns learned from large datasets to identify token boundaries. | Flexible and adaptable; can adjust based on context and linguistic features. | Requires large datasets for training; performance may vary based on data quality. | Natural language processing, context-sensitive tasks |
| Machine Learning-based Tokenization | Uses machine learning models to learn optimal tokenization strategies from diverse datasets. | Dynamic and robust; accommodates various languages and complex linguistic structures. | More complex to implement; requires significant computational resources for training. | Advanced NLP applications, multilingual processing |

Rule-based Tokenization

Rule-based tokenization relies on predefined rules, such as whitespace or punctuation, to determine token boundaries. This technique is straightforward and works well on simple, cleanly formatted text. For example, splitting text based on spaces and punctuation marks can yield accurate tokens in straightforward cases. However, this method may struggle with more complex cases, such as abbreviations like “U.S.”, contractions like “don’t”, or decimal numbers, where a naive punctuation rule splits in the wrong place.
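A rule-based tokenizer is often just an ordered list of patterns, where more specific rules are tried before general ones. The sketch below is one possible rule set written with Python's `re` module; the particular rules and their order are our own assumptions and would need extending for real text.

```python
import re

# Rules are tried left to right at each position, so specific patterns come first.
TOKEN_RE = re.compile(r"""
    (?:[A-Za-z]\.){2,}      # dotted abbreviations such as U.S. or e.g.
  | \d+(?:[.,]\d+)*         # numbers, including 3.50 and 1,000
  | \w+(?:['’]\w+)*         # words, keeping contractions like don't in one piece
  | [^\w\s]                 # any other non-space symbol on its own
""", re.VERBOSE)

def rule_tokenize(text: str) -> list[str]:
    """Return the tokens matched by the ordered rules, skipping whitespace."""
    return TOKEN_RE.findall(text)

print(rule_tokenize("The U.S. price rose to $3.50, didn't it?"))
# ['The', 'U.S.', 'price', 'rose', 'to', '$', '3.50', ',', "didn't", 'it', '?']
```

Every new special case (URLs, hashtags, hyphenated words) needs another hand-written rule, which is the maintenance cost that rule-based approaches carry.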

Statistical Tokenization

On the other hand, statistical tokenization employs probabilities and patterns to identify token boundaries. By analyzing large datasets, statistical methods can learn where tokens are likely to occur based on linguistic features. This approach allows for greater flexibility and adaptability, as the model can adjust its tokenization strategy based on the context of the text.
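One common way to turn probabilities into token boundaries is to score every possible segmentation with a unigram model and keep the most likely one, using dynamic programming. The sketch below assumes hand-picked token probabilities purely for illustration; in practice they would be estimated from a large corpus.

```python
import math

def segment(text: str, probs: dict[str, float], max_len: int = 10) -> list[str]:
    """Return the segmentation whose tokens have the highest product of probabilities."""
    # best[i] = (best log-probability of text[:i], start index of its last token)
    best = [(0.0, 0)] + [(-math.inf, 0)] * len(text)
    for end in range(1, len(text) + 1):
        for start in range(max(0, end - max_len), end):
            piece = text[start:end]
            if piece in probs and best[start][0] > -math.inf:
                score = best[start][0] + math.log(probs[piece])
                if score > best[end][0]:
                    best[end] = (score, start)
    if best[-1][0] == -math.inf:
        raise ValueError("text cannot be segmented with this vocabulary")
    # Walk the back-pointers from the end of the text to recover the tokens.
    tokens, end = [], len(text)
    while end > 0:
        start = best[end][1]
        tokens.append(text[start:end])
        end = start
    return tokens[::-1]

# Hypothetical probabilities; real ones come from corpus counts.
probs = {"the": 0.05, "table": 0.01, "down": 0.01, "there": 0.01,
         "theta": 0.0001, "bled": 0.0001, "own": 0.005}
print(segment("thetabledownthere", probs))  # ['the', 'table', 'down', 'there']
```

Both “the table down there” and “theta bled own there” are valid under this vocabulary; the probabilities are what make the first reading win.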

Machine Learning-based Tokenization

Machine learning-based tokenization takes the process a step further by learning tokenization strategies through ML models. This advanced method offers a dynamic approach, accommodating various languages and linguistic structures. By training on diverse datasets, these models can automatically determine token boundaries, ensuring robust performance across different applications.
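To give a feel for what learning token boundaries from data means, here is a deliberately tiny stand-in for a trained model: a classifier that decides, for each pair of adjacent characters, whether a boundary belongs between them, based on counts from pre-segmented training sentences. Real systems typically use sequence models such as CRFs or neural networks with much richer features; the training data and function names here are hypothetical.

```python
from collections import defaultdict

def train_boundary_model(segmented_sentences: list[list[str]]) -> dict:
    """Count, for each adjacent character pair, how often a boundary falls between them."""
    counts = defaultdict(lambda: [0, 0])  # pair -> [no-boundary count, boundary count]
    for tokens in segmented_sentences:
        text = "".join(tokens)
        boundaries, position = set(), 0
        for token in tokens[:-1]:
            position += len(token)
            boundaries.add(position)  # a boundary sits just before text[position]
        for i in range(1, len(text)):
            counts[(text[i - 1], text[i])][int(i in boundaries)] += 1
    return counts

def predict_tokens(text: str, counts: dict) -> list[str]:
    """Split wherever a boundary was seen more often than not for that character pair."""
    if not text:
        return []
    tokens, start = [], 0
    for i in range(1, len(text)):
        no_boundary, boundary = counts.get((text[i - 1], text[i]), (0, 0))
        if boundary > no_boundary:
            tokens.append(text[start:i])
            start = i
    tokens.append(text[start:])
    return tokens

# Hypothetical labeled data: each sentence is already split into tokens.
training = [["the", "cat", "sat"], ["the", "dog", "ran"], ["a", "dog", "sat"]]
model = train_boundary_model(training)
print(predict_tokens("thedogsat", model))  # ['the', 'dog', 'sat']
```

The sentence “thedogsat” is not in the training data, but each of its boundaries was observed in some training sentence, so the model generalizes to the new combination. Character pairs it has never seen default to no boundary.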

Challenges in Tokenization

Despite its importance, tokenization is not without challenges. For instance, handling punctuation and special characters can complicate the tokenization process. The presence of symbols, abbreviations, and varying formatting can lead to ambiguity in determining token boundaries.

Additionally, dealing with different languages and scripts poses unique challenges. Each language has its own rules and structures, requiring tokenization techniques to adapt accordingly. For example, while whitespace is a common delimiter in English, languages like Chinese do not utilize spaces between words, necessitating entirely different strategies.

Lastly, the ambiguity in token boundaries presents a persistent challenge. Consider compound words or phrases where the meaning can shift depending on how tokens are defined. Such nuances require careful consideration in designing tokenization strategies to ensure accurate interpretation.
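One practical response to this ambiguity is to re-join known multiword expressions after an initial split, so that “New York” is treated as one unit rather than two unrelated words. The following sketch assumes a hand-written list of expressions and a helper name of our own choosing.

```python
def merge_multiword(tokens: list[str], expressions: set[tuple[str, ...]], sep: str = "_") -> list[str]:
    """Join runs of tokens that form a known expression, preferring the longest match."""
    max_len = max((len(expr) for expr in expressions), default=1)
    merged, i = [], 0
    while i < len(tokens):
        # Try the longest possible expression first, down to length 2.
        for n in range(min(max_len, len(tokens) - i), 1, -1):
            if tuple(tokens[i:i + n]) in expressions:
                merged.append(sep.join(tokens[i:i + n]))
                i += n
                break
        else:
            merged.append(tokens[i])  # no expression starts here
            i += 1
    return merged

# Hypothetical expression list.
expressions = {("New", "York"), ("New", "York", "City"), ("ice", "cream")}
tokens = "She ate ice cream in New York City".split()
print(merge_multiword(tokens, expressions))
# ['She', 'ate', 'ice_cream', 'in', 'New_York_City']
```

Whether “New York City” should be one token, two, or three depends on the task, which is exactly why boundary ambiguity cannot be settled by a single universal rule.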

Applications of Tokenization in NLP

Tokenization serves as a backbone for various NLP applications. In text classification, for instance, it enhances data representation by allowing models to capture the significance of individual tokens. The more effectively a model can understand and classify text, the more accurate its predictions will be.
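As a simple illustration of how tokens become model input, the sketch below turns text into a bag-of-words count vector, one of the most basic representations used for classification. The two training sentences and the whitespace tokenizer are assumptions made for brevity.

```python
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace; a real pipeline would use a richer tokenizer."""
    return text.lower().split()

def build_vocabulary(documents: list[str]) -> list[str]:
    """Collect the distinct tokens of all documents in a fixed, sorted order."""
    return sorted({token for doc in documents for token in tokenize(doc)})

def vectorize(text: str, vocabulary: list[str]) -> list[int]:
    """Count how often each vocabulary token occurs in the text."""
    counts = Counter(tokenize(text))
    return [counts[token] for token in vocabulary]

vocabulary = build_vocabulary(["the movie was great", "the plot was dull"])
print(vocabulary)                                       # ['dull', 'great', 'movie', 'plot', 'the', 'was']
print(vectorize("Great movie great cast", vocabulary))  # [0, 2, 1, 0, 0, 0]
```

A classifier only ever sees vectors like this one, so any token the tokenizer merges, splits, or drops (here, the unseen word “cast”) directly changes what the model can learn.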

In sentiment analysis, tokenization enables the breakdown of text into its constituent parts, facilitating the detection of sentiments, whether positive, negative, or neutral. By analyzing the sentiment of customer reviews or social media posts, businesses can gain valuable insights into consumer opinions and trends.
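The effect of tokenization on sentiment analysis is easy to demonstrate with a toy lexicon-based scorer. The lexicon and review below are made up for the example; the point is only that the same scorer gives different answers depending on how the text was split.

```python
import re

# Hypothetical sentiment lexicon: word -> polarity.
LEXICON = {"great": 1, "good": 1, "bad": -1, "terrible": -1}

def score(tokens: list[str]) -> int:
    """Sum the polarity of every token found in the lexicon."""
    return sum(LEXICON.get(token.lower(), 0) for token in tokens)

review = "Great phone, terrible battery. Overall: good!"

whitespace_tokens = review.split()
regex_tokens = re.findall(r"\w+|[^\w\s]", review)

print(score(whitespace_tokens))  # 0
print(score(regex_tokens))       # 1
```

With a whitespace split, “good!” keeps its exclamation mark and no longer matches the lexicon entry “good”, so the positive signal is lost. Separating punctuation recovers it.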

Furthermore, in Named Entity Recognition (NER), proper tokenization is crucial for identifying named entities like people, places, or organizations. Accurate tokenization allows models to recognize and classify entities, enhancing their ability to extract information from unstructured text.
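For NER, tokens usually need to keep their character offsets so that entity annotations can be mapped onto them. The sketch below assigns BIO labels (Begin, Inside, Outside) to tokens from character-level entity spans; the sentence, spans, and function names are illustrative assumptions.

```python
import re

def tokenize_with_offsets(text: str) -> list[tuple[str, int, int]]:
    """Return (token, start, end) triples, where end is exclusive."""
    return [(m.group(), m.start(), m.end()) for m in re.finditer(r"\w+|[^\w\s]", text)]

def bio_labels(tokens: list[tuple[str, int, int]], entities: list[tuple[int, int, str]]) -> list[str]:
    """Label each token B-TYPE, I-TYPE, or O depending on the entity span containing it."""
    labels = []
    for _, tok_start, tok_end in tokens:
        label = "O"
        for ent_start, ent_end, ent_type in entities:
            if ent_start <= tok_start and tok_end <= ent_end:
                prefix = "B" if tok_start == ent_start else "I"
                label = f"{prefix}-{ent_type}"
                break
        labels.append(label)
    return labels

text = "Ada Lovelace visited London."
entities = [(0, 12, "PER"), (21, 27, "LOC")]  # hypothetical character-level annotations
tokens = tokenize_with_offsets(text)
print([token for token, _, _ in tokens])  # ['Ada', 'Lovelace', 'visited', 'London', '.']
print(bio_labels(tokens, entities))       # ['B-PER', 'I-PER', 'O', 'B-LOC', 'O']
```

If the tokenizer had kept “London.” as a single token, that token would extend past the annotated span and the location would receive no label, which is one concrete way poor tokenization hurts entity recognition.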

Tools and Libraries for Tokenization

Several tools and libraries facilitate the tokenization process in NLP.

- NLTK (Natural Language Toolkit)

NLTK is a widely used library for NLP tasks, providing a suite of tokenization capabilities. It offers simple functions for both word and sentence tokenization, making it an excellent choice for beginners and researchers alike.

- spaCy

spaCy is another powerful library known for its efficiency in processing large-scale data. Its tokenization features are designed to handle various linguistic structures, making it suitable for a wide range of applications. spaCy’s performance in real-time NLP tasks makes it a favorite among developers.

- Hugging Face Transformers

Finally, Hugging Face has emerged as a leader in integrating advanced tokenization techniques with deep learning models. Its Transformers library pairs each pretrained model with the tokenizer it was trained with, so text is split the same way during training and inference, which matters particularly in context-rich tasks.

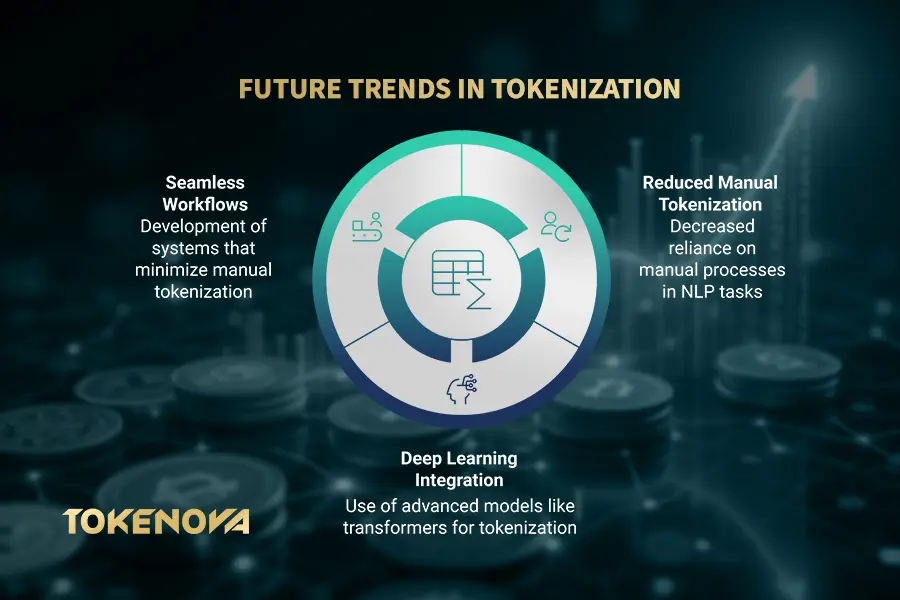

Future Trends in Tokenization

As we look to the future, several trends are shaping the evolution of tokenization. With the rise of deep learning, transformer models such as BERT have made learned subword vocabularies the default; BERT, for example, uses WordPiece. The tokenizer still fixes the token boundaries, but the model then uses the surrounding context to interpret each token, which helps it cope with rare words that are split into several pieces.

Additionally, we anticipate an integration of tokenization with other NLP components. As end-to-end systems become more prevalent, the need for manual tokenization may diminish. Future developments will likely focus on creating seamless workflows that reduce the complexities involved in language processing.

Tokenova Services

Tokenova is at the forefront of advancing tokenization services, bridging the gap between NLP and blockchain technology. Their offerings provide robust solutions for tokenization, ensuring businesses can leverage the power of language processing and blockchain seamlessly. In the realm of crypto, Tokenova supports projects by providing essential infrastructure for tokenized assets, contributing to the evolving landscape of digital finance.

Conclusion

In summary, tokenization in NLP is a vital process that serves as the foundation for understanding and processing human language. By breaking text into manageable units, we enable machines to analyze, interpret, and derive meaning from language data. The diverse types of tokenization, various techniques, and real-world applications underscore its significance across NLP tasks.

As we forge ahead into the future of language processing, the role of tokenization will continue to expand, enhanced by advancements in AI and deep learning. By embracing these innovations, we can look forward to a world where machines understand us with greater accuracy and nuance, ultimately enriching our interactions with technology.

What are some common challenges in tokenization?

Challenges include handling punctuation, adapting to different languages, and resolving ambiguity in token boundaries.

How does tokenization improve sentiment analysis?

Tokenization allows for the breakdown of text into individual units, enabling models to detect sentiments more accurately.

What is the role of NLTK in tokenization?

NLTK provides a suite of functions for easy word and sentence tokenization, making it accessible for researchers and developers.

How does machine learning enhance tokenization techniques?

Machine learning-based tokenization learns token boundaries automatically through models, adapting to various languages and structures.

What is the significance of subword tokenization?

Subword tokenization helps handle rare words and allows models to generalize better by breaking down words into smaller, meaningful units.