Tokenization in cyber security is a powerful technique that enhances data protection by replacing sensitive information with non-sensitive substitutes known as tokens. These tokens have no exploitable meaning or value, thus minimizing the risk associated with data breaches. In today’s digital age, where cyber threats are increasingly sophisticated and frequent, cyber security tokenization has become an essential component of an organization’s data security strategy.

Tokenization is particularly important in industries that handle vast amounts of sensitive data, such as finance, healthcare, and e-commerce. By implementing tokenization, organizations can not only protect their customers’ personal information but also ensure compliance with stringent data protection regulations like:

- Payment Card Industry Data Security Standard (PCI DSS)

- General Data Protection Regulation (GDPR)

- Health Insurance Portability and Accountability Act (HIPAA)

How Tokenization Enhances Data Protection

Tokenization enhances data protection by fundamentally changing how sensitive information is stored and accessed within an organization’s systems.

Protecting Sensitive Information

Data substitution is a fundamental aspect of tokenization, where sensitive data elements are replaced with non-sensitive tokens. For instance, a credit card number, such as “1234-5678-9012-3456,” can be substituted with a token like “TKN-0001-0002-0003.” These tokens do not carry any meaningful information about the original data, thereby enhancing security. The original sensitive data, along with its corresponding tokens, is securely stored in a specialized database known as a token vault.

Access to this vault is strictly controlled and monitored, ensuring that only authorized personnel or systems can retrieve the actual data when necessary. This setup allows systems that process transactions or perform analytics to operate using tokens, significantly reducing exposure to sensitive information by minimizing the number of systems that interact with the original data.
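To make the vault idea concrete, here is a minimal sketch of the tokenize and detokenize round trip. It is an illustration under stated assumptions rather than a production design: the `TokenVault` class, its method names, and the `TKN-` prefix are hypothetical, and the in-memory dictionaries stand in for what would be an encrypted, access-controlled database.

```python
import secrets


class TokenVault:
    """In-memory stand-in for a token vault (hypothetical, illustrative only)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so one value never maps to two tokens.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Random token: it has no mathematical relationship to the value.
        token = "TKN-" + secrets.token_hex(8)
        while token in self._token_to_value:  # guard against a rare collision
            token = "TKN-" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back to the original value.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("1234-5678-9012-3456")
print(token)                    # random on every run
print(vault.detokenize(token))  # 1234-5678-9012-3456
```

Downstream systems would store and pass around only `token`; the original card number lives solely inside the vault.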

⚖️ Tokenova’s Cyber Team Says: Tokenization works by substituting sensitive data elements with a non-sensitive equivalent, referred to as a token, that has no extrinsic or exploitable meaning or value. This guide delves deep into how tokenization operates, its advantages over traditional encryption methods, and its critical role in modern cyber security frameworks.

Reducing the Risk of Data Breaches

A smaller attack surface is a key advantage of keeping sensitive data in a secure token vault. Limiting the amount of sensitive information held across the organization’s infrastructure reduces the number of potential points of vulnerability and leaves attackers fewer targets to exploit. If a breach does occur, cybercriminals obtain only tokens, which cannot be reversed into the original data without access to the token vault.

This renders stolen data essentially useless. Additionally, tokenization plays a vital role in helping organizations comply with various regulations that require stringent protection of sensitive information. By meeting these compliance requirements, businesses can avoid legal penalties and mitigate the risk of reputational damage.

Compliance with Data Protection Regulations

- PCI DSS: For organizations handling payment card information, tokenization helps meet PCI DSS requirements by reducing the scope of environments that need to be compliant. According to the PCI Security Standards Council, tokenization can minimize the number of system components for which PCI DSS compliance is required.

- GDPR: The European Union’s GDPR imposes strict regulations on how personal data is processed and stored. Tokenization can help organizations pseudonymize personal data, which is encouraged under GDPR to enhance data protection.

- HIPAA: In the healthcare industry, HIPAA requires the protection of patient health information. Tokenization can help secure electronic health records, supporting patient confidentiality and trust.

⚖️ Tokenova’s Cyber Team Says: By implementing tokenization, organizations can demonstrate a proactive approach to data protection, which is increasingly important in a world where data breaches can lead to significant financial and reputational harm.

Tokenization vs. Encryption

Tokenization and encryption are two of the most widely used methods for protecting sensitive data. Both aim to secure information, but their approaches, strengths, and use cases differ significantly. Understanding these differences can help you determine which solution is best for your needs.

Key Differences Between Tokenization and Encryption

How They Transform Data

- Encryption converts readable data into an unreadable format using algorithms and encryption keys. With the correct key, the data can be decrypted back to its original form, making it ideal for scenarios where data needs to be protected but still usable.

- Tokenization replaces data with a token, a random placeholder that has no mathematical link to the original data. Tokens can’t be reversed without access to the token vault, so a stolen token on its own reveals nothing about the original value.

Where and How Data is Stored

- Encryption leaves the protected data, in encrypted form, within the systems that store it. The ciphertext exposes no sensitive details, but once it is decrypted for use, the original data becomes vulnerable.

- Tokenization stores sensitive information only in the token vault. Tokens, not the actual data, are used in operational systems, significantly reducing the risk of exposure.

Performance Impact

- Encryption can be resource-intensive, especially with large datasets. Decrypting data for processing can introduce delays, impacting system performance.

- Tokenization often has a lighter impact on downstream processing because most systems handle tokens directly and never need the original value. Operations that do need it require a vault lookup, which adds its own latency.

Advantages of Tokenization Over Encryption

- Simplified Compliance: With tokenization, sensitive data is removed from main systems, reducing the scope of audits and compliance requirements for regulations like PCI DSS and HIPAA.

- Enhanced Security: Unlike encrypted data, which can potentially be decrypted by attackers, tokens have no mathematical relationship to the original data. Without the token vault, tokens are meaningless.

- Operational Efficiency: Tokens can be formatted to mimic the structure of the original data, simplifying processes like database queries and analytics while maintaining security.

When to Use Tokenization vs. Encryption

Tokenization is often the better choice when certain conditions are present. For instance, if compliance or business needs dictate that sensitive data be completely removed from systems, tokenization serves as the ideal solution. It is especially beneficial in industries like healthcare and finance, where it helps reduce compliance burdens. Tokenization is typically used to safeguard specific data types such as payment card information, Personally Identifiable Information (PII), and Protected Health Information (PHI).

On the other hand, encryption is more advantageous in scenarios where secure transmission is crucial. It excels at securing data as it travels over networks, such as during email exchanges or web transactions. Encryption also suits data that authorized parties must be able to recover in full, since anyone holding the correct key can decrypt it when necessary. Searching or analyzing data while it remains encrypted is possible only with specialized schemes, so most systems decrypt before processing.

Tokenization vs. Encryption: Comprehensive Feature Comparison Table

| Feature | Tokenization | Encryption |
| --- | --- | --- |
| Data Transformation | Replaces data with a token; not reversible without access to the token vault | Converts data to unreadable format; reversible with key |
| Storage Requirements | Sensitive data stored only in a token vault | Encrypted data can be stored anywhere, but sensitive info is exposed when decrypted |
| Performance Impact | Typically less impact on performance | Can be computationally intensive, affecting speed |
| Compliance | Simplifies compliance audits | Compliance scope remains extensive |
| Security | Tokens cannot be reversed without the token vault | Can be decrypted if keys are compromised |

⚖️ Tokenova’s Cyber Team Says: Many organizations implement both tokenization and encryption to create a layered security approach, combining the strengths of each method.

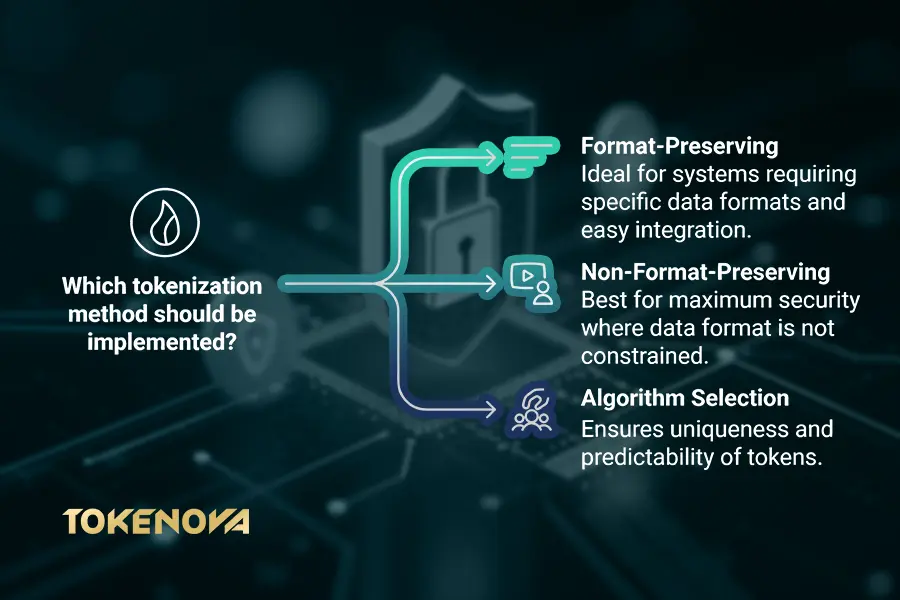

Tokenization Methods and Technologies: Finding the Right Fit

Tokenization is a powerful tool for protecting sensitive data, but it’s not a one-size-fits-all solution. Depending on your organization’s needs, you can choose from a variety of methods and technologies, each with its own strengths. Let’s dive into the key approaches and explore how to implement tokenization effectively.

Format-Preserving Tokenization: A Seamless Fit

With format-preserving tokenization, tokens mimic the structure and format of the original data. For example, a tokenized Social Security number still looks like a nine-digit number. This makes it a perfect choice for systems and applications that require data in a specific format.

The biggest advantage of this method is its ease of integration. Systems don’t need to be reconfigured to handle tokenized data, so it’s ideal for legacy databases or situations where making changes to the underlying schema isn’t practical. It also ensures a consistent user experience, as tokenized data remains recognizable and functional.

This approach works especially well in payment processing systems where card numbers must meet certain validation criteria, or in databases where altering the structure would be disruptive.
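As a rough illustration of the format-preserving idea, the sketch below swaps digits for random digits while keeping separators, length, and the last four digits. The function name `format_preserving_token` and the `keep_last` parameter are hypothetical. This is plain random substitution, not a standardized format-preserving encryption algorithm, and a real system would still record the token-to-value mapping in the vault and check for collisions.

```python
import secrets


def format_preserving_token(value: str, keep_last: int = 4) -> str:
    """Replace digits with random digits, keeping separators and the last few digits."""
    digit_positions = [i for i, ch in enumerate(value) if ch.isdigit()]
    # Positions of the trailing digits that stay visible (none if keep_last is 0).
    keep = set(digit_positions[-keep_last:]) if keep_last > 0 else set()
    out = []
    for i, ch in enumerate(value):
        if ch.isdigit() and i not in keep:
            out.append(str(secrets.randbelow(10)))  # cryptographically random digit
        else:
            out.append(ch)  # separators and kept digits pass through unchanged
    return "".join(out)


card_token = format_preserving_token("1234-5678-9012-3456")
ssn_token = format_preserving_token("123456789", keep_last=0)
print(card_token)  # same layout as the input, ending in 3456
print(ssn_token)   # nine random digits
```

Because the output has the same length and layout as the input, it fits an existing column or validation rule that expects that shape.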

Non-Format-Preserving Tokenization: Prioritizing Security

If security is your top priority, non-format-preserving tokenization might be the way to go. Unlike the format-preserving method, these tokens don’t resemble the original data at all. They could be alphanumeric strings or randomized sequences that make it virtually impossible to infer anything about the original data.

This method shines in back-end processes or internal systems where data format isn’t constrained. It’s particularly valuable in environments where maximum security is critical, as the lack of any connection to the original data format adds an extra layer of protection.
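For comparison, a non-format-preserving token can simply be an opaque random string. The sketch below uses Python’s standard `secrets` and `uuid` modules; the helper names `opaque_token` and `uuid_token` are hypothetical, and the token length is an arbitrary choice for the example.

```python
import secrets
import uuid


def opaque_token() -> str:
    # 24 random bytes encoded as 32 URL-safe characters.
    return secrets.token_urlsafe(24)


def uuid_token() -> str:
    # Random (version 4) UUID in its 36-character text form.
    return str(uuid.uuid4())


print(opaque_token())
print(uuid_token())
```

Neither output reveals the length, character set, or layout of the value it stands in for.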

Choosing the Right Algorithms and Standards

The way tokens are generated also plays a big role in their security and usability. Two common approaches include random number generation, which ensures tokens are unique and unpredictable, and deterministic tokenization, where the same input always maps to the same token. Deterministic tokenization is especially helpful for analytics, as it allows consistency without exposing the original data.
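The difference between the two generation approaches can be sketched in a few lines. This example assumes a keyed hash (HMAC-SHA256) as the deterministic method, which is one common option rather than the only one; `SECRET_KEY`, `random_token`, and `deterministic_token` are hypothetical names, and a real key would come from a key management system.

```python
import hashlib
import hmac
import secrets

# Hypothetical demo key; a real deployment would load this from a key manager.
SECRET_KEY = b"demo-key-change-me"


def random_token() -> str:
    # Unpredictable and different on every call; needs a vault to map back.
    return secrets.token_hex(16)


def deterministic_token(value: str, key: bytes = SECRET_KEY) -> str:
    # The same value and key always produce the same token.
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:32]


print(random_token() == random_token())                              # False
print(deterministic_token("alice") == deterministic_token("alice"))  # True
```

One trade-off to keep in mind: deterministic tokens reveal when two records share the same underlying value, which is exactly what makes them useful for analytics.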

Adhering to established standards like NIST SP 800-57 for key management or ANSI X9.119-2-2017 for financial tokenization ensures that your implementation meets industry benchmarks for security and compliance.

How to Implement Tokenization Effectively

Implementing tokenization isn’t just about choosing the right method; it’s about building a system that works smoothly with your organization’s processes while maintaining strong security.

The process breaks down into three steps:

- Start by securing the token vault, which stores the original sensitive data. This means encrypting the vault, setting strict access controls, and keeping detailed audit logs to track activities. A well-secured vault ensures that even if your main systems are compromised, the sensitive data remains protected.

- Next, think about integration. Tokenized data needs to work with your existing applications and workflows, so compatibility is key. Plan for adjustments to data processing systems, but aim to minimize disruptions.

- Finally, optimize for performance. If your business handles high transaction volumes, caching frequently accessed tokens can reduce retrieval times. Make sure your tokenization solution is scalable so it can grow alongside your data needs.
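The first step above, a vault with strict access controls and an audit trail, might look roughly like this. The `SecuredVault` class and the caller names are hypothetical, and the sketch leaves out encryption at rest, persistence, and caching to stay short.

```python
import secrets
from datetime import datetime, timezone


class SecuredVault:
    """Toy vault with an allow-list and an audit log (hypothetical sketch)."""

    def __init__(self, authorized_callers):
        self._store = {}
        self._authorized = set(authorized_callers)
        self.audit_log = []

    def _record(self, caller: str, action: str, allowed: bool) -> None:
        # Log every attempt, including the ones that are refused.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "caller": caller,
            "action": action,
            "allowed": allowed,
        })

    def tokenize(self, caller: str, value: str) -> str:
        token = secrets.token_hex(16)
        self._store[token] = value
        self._record(caller, "tokenize", True)
        return token

    def detokenize(self, caller: str, token: str) -> str:
        allowed = caller in self._authorized
        self._record(caller, "detokenize", allowed)
        if not allowed:
            raise PermissionError(f"{caller} may not detokenize")
        return self._store[token]


demo_vault = SecuredVault(authorized_callers={"payments-service"})
demo_token = demo_vault.tokenize("web-frontend", "4111-1111-1111-1111")
print(demo_vault.detokenize("payments-service", demo_token))
```

In this sketch any caller may create tokens, but only allow-listed callers can recover the original value, and refused attempts are still written to the log.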

Making It Work for Your Organization

The right tokenization strategy requires collaboration. Your IT team, security experts, and business leaders need to align on goals, ensuring the solution fits both technical and operational requirements. With the right approach, tokenization doesn’t just protect your data; it empowers your organization to operate securely, efficiently, and with confidence.

By understanding the methods and technologies available, you can tailor a tokenization strategy that not only safeguards your sensitive information but also integrates seamlessly into your business processes. It’s about finding the balance between security, usability, and scalability.

Benefits of Tokenization in Cyber Security

Tokenization offers numerous benefits that extend beyond basic data protection.

Enhanced Security Measures

✅Data Minimization: By storing less sensitive data within operational systems, the overall risk profile is reduced.

✅Resilience to Attacks: Even if an attacker gains access to systems, the absence of actual sensitive data limits potential damage.

✅Layered Defense: Tokenization complements other security measures like firewalls and intrusion detection systems, creating a more robust defense strategy.

Simplified Compliance Processes

✅Reduced Audit Scope: Fewer systems handle sensitive data, simplifying compliance audits and reducing associated costs.

✅Streamlined Reporting: Simplifies the process of demonstrating compliance to regulators and stakeholders.

✅Policy Enforcement: Easier to enforce data handling policies when sensitive data is centralized in a secure token vault.

Cost-Effectiveness and Scalability

✅Lower Operational Costs: Reduces the need for expensive security infrastructure across all systems.

✅Scalability: Tokenization solutions can scale to handle increasing data volumes without significant additional investment.

✅Future-Proofing: As regulatory requirements evolve, tokenization provides a flexible foundation for adapting to new compliance standards.

Improved Customer Trust and Confidence

✅Brand Differentiation: Demonstrating a commitment to data security can be a competitive advantage.

✅Customer Retention: Clients are more likely to remain loyal to organizations that prioritize the protection of their personal information.

✅Risk Mitigation: Reduces the likelihood of public relations crises resulting from data breaches.

⚖️ Tokenova’s Cyber Team Says: According to a survey by PwC, 85% of consumers will not do business with a company if they have concerns about its security practices.

Common Use Cases for Tokenization

Tokenization is versatile and applicable across various industries and scenarios.

Payment Processing and PCI DSS Compliance

Card-on-file transactions are increasingly common in e-commerce, where businesses store tokens instead of actual card data for repeat purchases, enhancing security by reducing the risk of sensitive information being compromised. Point-of-sale systems in retail also use tokenization to protect payment information during in-store transactions. Additionally, mobile payment apps like Apple Pay and Google Pay employ tokenization to secure data, allowing users to make quick purchases without exposing their actual card details. Overall, tokenization is vital for enhancing security across various payment platforms.

Protecting Personally Identifiable Information (PII)

CRM systems use tokenization to protect the personally identifiable information (PII) they hold, preventing unauthorized access while customer service operations continue without interruption. Human Resources (HR) departments tokenize employee records to secure sensitive details such as salaries and home addresses. In education, schools and universities tokenize student records, including grades and personal information.

Securing Health Information (HIPAA Compliance)

Hospitals and clinics tokenize patient data in Electronic Medical Records (EMR) to support HIPAA compliance and protect privacy. Tokenized records also let researchers use patient data in pharmaceutical studies without revealing identities. Similarly, health insurance companies use tokenization to protect policyholder information, reducing the risk of identity theft and fraud within the healthcare system.

Tokenization in Cloud Security

Organizations migrating to the cloud often use tokenization to protect sensitive data during and after the process. This approach ensures consistent data protection across multi-cloud environments and is also employed by SaaS providers to safeguard client information stored and processed in the cloud.

⚖️ Tokenova’s Cyber Team Says: As cloud adoption grows, tokenization becomes increasingly critical in maintaining data security in distributed environments.

Challenges and Considerations in Tokenization

Implementing tokenization offers numerous benefits, but it also comes with its own set of challenges. From system compatibility to ongoing maintenance, organizations need to address these hurdles to ensure a successful deployment. Let’s explore the key challenges and what you can do to overcome them.

Implementation Challenges

Getting started with tokenization often requires significant effort. For businesses with older systems, compatibility can be an issue. Many legacy systems are not built to support tokenization, requiring extensive modifications or even complete overhauls to accommodate the technology.

Additionally, resource allocation is critical. Implementing tokenization requires investment in both technology and personnel training. Employees need to understand new workflows and security protocols, making change management a key aspect of the process. Without proper education and buy-in, the transition to tokenization can face unnecessary roadblocks.

Managing Token Mappings

A secure and reliable token vault is the backbone of any tokenization system. If the vault is compromised, the entire system’s security is at risk. Organizations must ensure that the vault is encrypted, access is tightly controlled, and activities are logged to prevent unauthorized access.

Data synchronization across systems adds another layer of complexity. Token mappings need to be updated consistently, especially when working with multiple applications or databases. Additionally, having a robust backup and recovery plan is non-negotiable. Any loss of token mappings could disrupt operations and put sensitive data at risk.
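A backup and recovery routine for token mappings could be sketched as follows. The function names `export_mappings` and `import_mappings` are hypothetical. The SHA-256 checksum here only detects accidental corruption, and because a mapping backup contains the original sensitive values, a real backup would also need to be encrypted and access-controlled.

```python
import hashlib
import json


def export_mappings(mappings: dict) -> str:
    """Serialize token mappings together with a checksum of the payload."""
    payload = json.dumps(mappings, sort_keys=True)
    checksum = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return json.dumps({"checksum": checksum, "payload": payload})


def import_mappings(backup: str) -> dict:
    """Restore mappings, refusing a backup whose checksum does not match."""
    wrapper = json.loads(backup)
    payload = wrapper["payload"]
    actual = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    if actual != wrapper["checksum"]:
        raise ValueError("backup failed integrity check")
    return json.loads(payload)


original = {"TKN-0001": "1234-5678-9012-3456"}
backup = export_mappings(original)
print(import_mappings(backup) == original)  # True
```

Testing the restore path regularly matters as much as taking the backup, since an unreadable backup is discovered only when it is needed.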

Performance Implications

Tokenization systems can introduce latency, particularly in real-time environments like payment processing or financial transactions. Addressing these latency issues requires careful optimization of tokenization workflows.

Scalability is another consideration. The system must be able to handle peak loads without performance degradation, especially for businesses dealing with high transaction volumes. This requires planning for growth and ensuring the tokenization provider can scale with your business.

To ensure smooth operations, continuous monitoring and maintenance are essential. Regular performance checks help identify bottlenecks early, allowing teams to resolve issues before they impact operations.
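A regular performance check can start as simply as timing the tokenization call and watching the median and tail latency. The sketch below is an assumption-laden example: `measure_latency` and `tokenize_once` are hypothetical names, and the in-memory dictionary stands in for a real vault, so the absolute numbers it prints say nothing about a networked deployment.

```python
import secrets
import statistics
import time


def measure_latency(operation, runs: int = 1000) -> dict:
    """Time repeated calls and summarize the results in milliseconds."""
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples_ms.append((time.perf_counter() - start) * 1000)
    samples_ms.sort()
    return {
        "median_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[int(0.95 * (len(samples_ms) - 1))],
        "max_ms": samples_ms[-1],
    }


store = {}  # stand-in for a real vault


def tokenize_once() -> str:
    token = secrets.token_hex(16)
    store[token] = "4111-1111-1111-1111"
    return token


report = measure_latency(tokenize_once, runs=500)
print(report)
```

Tracking the 95th percentile alongside the median helps surface the occasional slow lookup that an average would hide.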

Choosing the Right Tokenization Provider

Selecting the right provider is a critical decision that can make or break your tokenization strategy. Here are the key factors to consider:

- Security Credentials: The provider should comply with the standards and regulations that apply to you, such as PCI DSS, GDPR, or HIPAA, and hold relevant certifications.

- Customization Options: Your tokenization needs may vary, so the provider’s solution should be flexible enough to adapt to your specific requirements.

- Service Level Agreements (SLAs): Ensure the provider guarantees uptime, performance benchmarks, and support response times.

- Reputation and Experience: Look for a provider with a proven track record and positive feedback from other clients in your industry.

⚖️ Tokenova’s Cyber Team Says: Conducting thorough due diligence when choosing a provider ensures long-term reliability, security, and scalability.

Best Practices for Implementing Tokenization

Adhering to best practices can significantly enhance the effectiveness of tokenization initiatives.

Assessing Organizational Needs

- Comprehensive Data Audit: Identify all sources of sensitive data within the organization.

- Risk Assessment: Evaluate the potential impact of data breaches on different data types.

- Regulatory Requirements: Understand the specific compliance obligations relevant to your industry and region.

Choosing the Appropriate Tokenization Method

- Format Requirements: Determine whether format-preserving tokens are necessary for system compatibility.

- Security Level: Assess the sensitivity of the data to decide on the complexity of the tokenization method.

- Operational Impact: Consider how different methods will affect processing speed and system performance.

Ensuring Seamless Integration with Existing Systems

- Pilot Programs: Start with a small-scale implementation to test integration points and identify issues.

- Cross-Functional Teams: Involve stakeholders from IT, security, compliance, and business units to ensure all perspectives are considered.

- Vendor Support: Leverage the expertise of the tokenization provider for best practices in integration.

Regularly Reviewing and Updating Tokenization Strategies

- Continuous Monitoring: Implement systems to detect anomalies or unauthorized access attempts.

- Periodic Audits: Regularly assess the effectiveness of tokenization and make necessary adjustments.

- Stay Informed: Keep abreast of new threats, technological advancements, and changes in regulatory requirements.

Employee Training and Awareness

- Security Training: Educate staff on the importance of tokenization and their role in maintaining data security.

- Access Controls: Ensure that only authorized personnel have access to sensitive systems.

- Incident Response Plan: Develop and regularly update a plan for responding to security incidents.

By following these best practices, organizations can maximize the benefits of tokenization while minimizing potential risks.

Future Trends in Tokenization and Cyber Security

The landscape of tokenization and cyber security is continually evolving, with new technologies and regulations shaping its future.

Advances in Tokenization Technologies

- Blockchain Integration: Leveraging blockchain for tokenization can enhance security through decentralized validation and immutable ledgers.

- Artificial Intelligence (AI): AI and machine learning algorithms can improve tokenization processes by identifying patterns and optimizing token generation.

- Quantum-Resistant Tokenization: Preparing for future threats posed by quantum computing by developing tokenization methods resistant to quantum attacks.

Integration with Emerging Technologies

- Internet of Things (IoT): As IoT devices proliferate, tokenization can secure the vast amounts of data generated.

- Edge Computing: Implementing tokenization at the edge can enhance data security in distributed networks.

- Zero Trust Security Models: Tokenization aligns with zero trust principles by minimizing data exposure and enforcing strict access controls.

Evolving Regulatory Landscape

- Global Data Protection Laws: New regulations like Brazil’s LGPD and California’s CCPA expand the need for robust data protection strategies.

- Industry-Specific Compliance: Sectors like finance and healthcare may introduce additional standards, necessitating updates to tokenization practices.

- Cross-Border Data Transfers: Tokenization can facilitate compliance with data residency laws by keeping sensitive data within specific jurisdictions.

⚖️ Tokenova’s Cyber Team Says: Staying ahead of these trends will enable organizations to maintain strong security postures and adapt to changing requirements.

At Tokenova, we provide tailored tokenization solutions, guiding you through the entire process to meet your unique business needs. Whether in finance, healthcare, or retail, our goal is to secure your data and help your business thrive.

Why Choose Tokenova?

Tailored Solutions: We align your tokenization systems with your business operations.

Expert Guidance: We help navigate regulatory requirements and optimize your infrastructure.

Comprehensive Support: From onboarding to ongoing assistance, we’re with you every step of the way.

Conclusion

Tokenization represents a significant advancement in the field of cyber security, offering robust protection for sensitive data in an increasingly interconnected world. By replacing actual data with tokens, organizations can mitigate the risks associated with data breaches, streamline compliance efforts, and foster greater trust with customers and partners.

Adopting tokenization is not merely a technical decision but a strategic one that reflects an organization’s commitment to security and privacy. As cyber threats continue to evolve, and as data protection regulations become more stringent, tokenization will play an integral role in safeguarding information assets.

⚖️ Tokenova’s Cyber Team Closes by Saying: Organizations that proactively implement tokenization position themselves for long-term success by protecting their most valuable resource: data.

Key Takeaways

- Tokenization is a crucial tool in cyber security, offering enhanced protection for sensitive data by replacing it with non-sensitive tokens.

- It provides advantages over encryption, including reduced compliance scope, simplified data handling, and improved security.

- Tokenization is applicable across various industries and use cases, from payment processing to healthcare data protection.

- Staying informed about future trends and best practices ensures that tokenization strategies remain effective in the face of evolving cyber threats.

How does tokenization affect data analytics and reporting?

Tokenization can complicate data analytics if not properly implemented. To address this, organizations can use deterministic tokenization, which ensures that the same input always results in the same token. This allows for meaningful aggregation and analysis without exposing sensitive data.
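Here is a small sketch of that aggregation in practice. It assumes a keyed hash (HMAC-SHA256) as the deterministic tokenization step; `KEY`, `tokenize_id`, and the sample purchases are made up for the example.

```python
import hashlib
import hmac
from collections import Counter

KEY = b"demo-analytics-key"  # hypothetical key for the example


def tokenize_id(customer_id: str) -> str:
    # Same input and key always give the same token, so records still group.
    return hmac.new(KEY, customer_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


purchases = [
    ("alice@example.com", 30.0),
    ("bob@example.com", 12.5),
    ("alice@example.com", 7.5),
]

# The analytics side only ever sees tokens, never the email addresses.
tokenized = [(tokenize_id(email), amount) for email, amount in purchases]

totals = Counter()
for token, amount in tokenized:
    totals[token] += amount

print(len(totals))                               # 2 distinct customers
print(totals[tokenize_id("alice@example.com")])  # 37.5
```

The report can count customers and sum their spending even though it never handles an email address.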

Is tokenization compatible with cloud services and platforms?

Yes, tokenization is compatible with cloud services. Many cloud providers offer tokenization as part of their security services, and third-party solutions can be integrated with cloud platforms to protect data stored and processed in the cloud.

What is the difference between tokenization and data masking?

Tokenization replaces sensitive data with tokens and stores the original data in a secure vault, suitable for both production and non-production environments. Data masking obscures data by altering it in a way that it cannot be reversed, typically used in non-production environments like testing and development where real data is not required.
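The contrast can be shown side by side. In this sketch, `mask_card` and `tokenize` are hypothetical helper names and the dictionary stands in for a real vault: the masked value cannot be turned back into the card number, while the token can, but only through the vault.

```python
import secrets


def mask_card(card_number: str, visible: int = 4) -> str:
    """Replace all but the last few digits with asterisks (irreversible)."""
    total_digits = sum(ch.isdigit() for ch in card_number)
    digits_seen = 0
    out = []
    for ch in card_number:
        if ch.isdigit():
            digits_seen += 1
            out.append(ch if digits_seen > total_digits - visible else "*")
        else:
            out.append(ch)
    return "".join(out)


token_vault = {}  # stand-in for a real token vault


def tokenize(card_number: str) -> str:
    """Swap the value for a random token and remember the mapping."""
    token = secrets.token_hex(8)
    token_vault[token] = card_number
    return token


card = "1234-5678-9012-3456"
masked = mask_card(card)
card_tok = tokenize(card)
print(masked)                 # ****-****-****-3456
print(token_vault[card_tok])  # 1234-5678-9012-3456
```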