In today’s digital landscape, data is as valuable as currency, making the protection and effective use of sensitive information paramount for businesses. Tokenization in data analytics emerges as a pivotal solution, offering robust security while preserving data usability for advanced analytics. According to IBM’s 2021 Cost of a Data Breach Report, the average data breach cost organizations $4.24 million, underscoring the urgent need for effective data protection strategies.

This comprehensive guide delves into the intricacies of tokenization, exploring how it transforms sensitive data into secure tokens without compromising analytical capabilities. As industries grapple with stringent data privacy regulations like GDPR, HIPAA, and PCI DSS, understanding and implementing tokenization becomes essential for maintaining compliance and safeguarding critical information. Whether you’re in finance, healthcare, or e-commerce, this article provides actionable insights into leveraging tokenization to enhance both security and analytical power, ensuring your data-driven strategies are both effective and secure.

What is Tokenization, and How Does It Work in Data Analytics?

Tokenization is a process that replaces sensitive data with unique, non-sensitive identifiers known as tokens. Tokens can be generated to retain the format of the original data (for example, a 16-digit token for a 16-digit card number), but they are meaningless outside the system that generates and maps them. Unlike encryption, which transforms data with a reversible algorithm and a key, classic vault-based tokenization stores the sensitive data in a secured token vault and uses randomly generated tokens as stand-ins. Because a random token has no mathematical relationship to the value it replaces, a breach of the tokenized database alone does not expose the original data.

How Tokenization Works in Data Analytics

- Token Generation: When sensitive data (e.g., a credit card number) is entered into a system, a tokenization platform replaces it with a randomly generated token that mirrors the original format.

- Storage in Token Vault: The sensitive data is securely stored in a token vault, ensuring that it can only be accessed through authorized requests.

- Token Usage: Tokens can be used in place of the actual data for analytics, machine learning, or other operational purposes without revealing the sensitive details. For example, tokenized datasets allow businesses to perform customer segmentation while preserving privacy.

- Data Retrieval (If Needed): In scenarios requiring access to the original data, authorized systems or users can map tokens back to their sensitive counterparts via the token vault.
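The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `TokenVault` class is hypothetical, the vault is an in-memory dictionary rather than a hardened, encrypted, access-controlled store, and authorization is reduced to a boolean flag.

```python
import secrets


class TokenVault:
    """Hypothetical vault-based tokenizer. A real vault is a hardened,
    encrypted, access-controlled store; a dict stands in for it here."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        """Token generation + storage: swap every digit for a random digit,
        keeping length and separators so the token mirrors the original format."""
        if not any(ch.isdigit() for ch in value):
            raise ValueError("this sketch only tokenizes values containing digits")
        while True:
            token = "".join(
                secrets.choice("0123456789") if ch.isdigit() else ch for ch in value
            )
            # Retry on a collision with an existing token or with the value itself.
            if token != value and token not in self._token_to_value:
                break
        self._token_to_value[token] = value  # the original lives only in the vault
        return token

    def detokenize(self, token: str, authorized: bool = False) -> str:
        """Data retrieval: only authorized callers may map a token back."""
        if not authorized:
            raise PermissionError("detokenization requires authorization")
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111-1111-1111-1111")
print(token)  # random digits in the same ####-####-####-#### shape
print(vault.detokenize(token, authorized=True))  # 4111-1111-1111-1111
```

Analytics jobs would receive only `token`; in a real deployment the `detokenize` call sits behind the vault's authentication and audit controls.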

Benefits for Data Analytics

- Preserves Data Usability: Tokens maintain the structure and format of the data, allowing seamless integration with analytics tools and workflows.

- Enhances Security: Sensitive data is kept in a secured vault rather than in analytics systems, which supports compliance with regulations like GDPR and HIPAA.

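- Supports Real-Time Analytics: Unlike encrypted data, which usually has to be decrypted before it can be analyzed, tokenized data can be processed as it arrives. Counts, joins, and segmentation work directly on tokens, as long as the same value always maps to the same token.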

For instance, an e-commerce platform can analyze purchasing patterns using tokenized customer data, improving personalization without exposing personal information. By combining security with accessibility, tokenization becomes a cornerstone for data analytics in a privacy-conscious world.
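To make the e-commerce example concrete, here is a small sketch of purchase analysis that runs entirely on tokens. The order records and `tok_` values are invented for illustration, and the sketch assumes customer identifiers were tokenized deterministically (one customer always maps to the same token), which is what makes per-customer grouping possible.

```python
from collections import defaultdict

# Hypothetical order records: the customer identifier was already replaced
# by a deterministic token, so one customer always carries the same token.
orders = [
    {"customer": "tok_7f3a", "category": "shoes", "amount": 80.0},
    {"customer": "tok_7f3a", "category": "socks", "amount": 12.0},
    {"customer": "tok_91c2", "category": "shoes", "amount": 95.0},
    {"customer": "tok_7f3a", "category": "shoes", "amount": 60.0},
]


def spend_by_customer(records):
    """Total spend per customer token; no original identifiers are involved."""
    totals = defaultdict(float)
    for record in records:
        totals[record["customer"]] += record["amount"]
    return dict(totals)


def segment(totals, threshold=100.0):
    """Label each customer token as a high- or low-value segment."""
    return {tok: "high" if total >= threshold else "low" for tok, total in totals.items()}


totals = spend_by_customer(orders)
print(totals)           # {'tok_7f3a': 152.0, 'tok_91c2': 95.0}
print(segment(totals))  # {'tok_7f3a': 'high', 'tok_91c2': 'low'}
```

The segment labels can be joined back to marketing systems by token; only a system authorized to query the vault could learn who `tok_7f3a` actually is.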

Benefits of Tokenization in Data Analytics

Tokenization provides numerous advantages, particularly for organizations handling large volumes of sensitive data. Balancing data security with analytics usability is a key reason for its widespread adoption across various industries.

How Tokenization Enhances Data Security and Privacy

Keeping sensitive data secure is a top priority for businesses today, and tokenization offers an effective way to do it. By swapping sensitive information for tokens, companies can protect valuable data while still using it for essential tasks like analytics and operations. Even if attackers obtain a tokenized database, the tokens cannot be mapped back to the original values without access to the secure token vault.

Real-World Benefits of Tokenization

- Smaller Breach Impact: Systems that hold only tokens do not contain the original sensitive values, so a breach of those systems exposes far less usable data.

- Lower Fraud Risks: According to Forrester, tokenization reduces fraud risks, thanks to secure data handling in everything from payments to analytics.

Why Tokenization Works

The beauty of tokenization is its simplicity. Tokens are designed to look like the original data (think of a credit card number replaced with a similar-looking token), but they’re useless on their own. The real data stays locked away in a highly secure token vault. Unlike encryption, vault-based tokenization has no decryption key that can turn a token back into the original value; the security burden shifts to protecting the vault and controlling who may query it.

Expert Perspective

For companies that rely on data-driven insights but need to keep customer trust intact, tokenization is a natural fit. It’s a modern, practical solution to a big problem, and it’s proving its worth across industries like finance, healthcare, and retail.

Usability for Real-Time Analytics

Unlike encryption, which often requires data decryption before analysis, tokenization allows data to remain in a usable format while still protecting the underlying sensitive information.

🗃️Data Analyst Tip: Businesses can analyze tokenized data in real time without exposing sensitive details, making tokenization ideal for industries that rely on data-driven decision-making.

Integration with Analytical Tools:

- Business Intelligence Platforms: Tools like Tableau and Power BI can seamlessly integrate with tokenized datasets, enabling real-time dashboards and reporting without accessing sensitive data.

- Machine Learning Models: Tokenized data can be used to train machine learning models, ensuring that sensitive information remains protected during the training process.

Granular Analytics Capabilities

Tokenization offers more than data security: it opens doors to powerful, privacy-preserving analytics. By retaining the structure of the original data, businesses can extract valuable insights while ensuring sensitive information remains protected.

For instance, e-commerce giants leverage tokenized customer data to uncover purchasing trends, enabling highly targeted marketing campaigns without ever exposing personal details. This not only enhances customer engagement but also builds trust by keeping their data safe.

In supply chain management, tokenized sales data empowers companies to forecast inventory needs, optimize stock levels, and streamline operations, resulting in cost savings and improved efficiency. Meanwhile, in finance, tokenization facilitates fraud detection by allowing analysis of transaction patterns to flag suspicious activity, all without revealing actual account or payment details.
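The fraud-detection case can be illustrated with a simple velocity rule that only ever sees card tokens. This is a hypothetical sketch: the `flag_velocity` function, its thresholds, and the sample transactions are assumptions, and real fraud systems combine many more signals.

```python
from collections import defaultdict, deque


def flag_velocity(transactions, max_txns=3, window_seconds=60):
    """Return card tokens that made more than `max_txns` transactions within
    any `window_seconds`-long sliding window.

    `transactions` is an iterable of (unix_timestamp, card_token) pairs;
    no real card numbers are needed.
    """
    recent = defaultdict(deque)  # card token -> timestamps still in the window
    flagged = set()
    for ts, token in sorted(transactions):
        window = recent[token]
        window.append(ts)
        # Drop timestamps that fall outside the window ending at `ts`.
        while ts - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_txns:
            flagged.add(token)
    return flagged


txns = [
    (0, "tok_A"), (10, "tok_A"), (20, "tok_A"), (30, "tok_A"),  # 4 in 30 s
    (0, "tok_B"), (100, "tok_B"), (200, "tok_B"),               # spread out
]
print(flag_velocity(txns))  # {'tok_A'}
```

A flagged token can then be passed to an authorized fraud team, which is the only party that needs to detokenize it.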

By combining privacy compliance with data usability, tokenization proves itself as a cornerstone for businesses seeking to innovate responsibly. It’s a smart solution that enables real-time insights without the risk of exposing sensitive data.

Compliance with Data Protection Regulations

Tokenization helps businesses comply with stringent data protection regulations like GDPR, HIPAA, and PCI DSS. These regulations require companies to protect sensitive information throughout its lifecycle. Tokenization keeps the original values out of systems that only need tokens, such as payment processing or data analysis pipelines, and can shrink the number of systems that fall within audit scope (for PCI DSS, the cardholder data environment).

Alignment with Regulatory Requirements:

- GDPR: Tokenization supports data minimization and pseudonymization, key principles under GDPR. Pseudonymized data still counts as personal data under GDPR, so the regulation continues to apply to it.

- HIPAA: Tokenizing identifiers in medical records protects PHI and reduces the risk of unauthorized access.

- PCI DSS: Helps meet requirements for securing payment card data by replacing sensitive card numbers with tokens.

Regulatory Endorsements: “The adoption of tokenization is highly recommended for organizations seeking to comply with data protection laws, as it significantly reduces the risk of data breaches,” states the European Data Protection Board.
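For payment card data, one design option, assumed here for illustration, is a token that keeps the card’s last four digits for receipts and customer service while deliberately failing the Luhn checksum, so the token can never be mistaken for a valid card number. The function names are hypothetical; `luhn_valid` is the standard mod-10 check used by card numbers.

```python
import secrets


def luhn_valid(number: str) -> bool:
    """Standard Luhn (mod 10) check: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def card_token(pan: str) -> str:
    """Hypothetical token design: random digits with the same length as the PAN,
    the last four digits kept for display, and a forced Luhn failure."""
    if not pan.isdigit() or len(pan) < 13:
        raise ValueError("expected a digits-only card number")
    while True:
        body = "".join(secrets.choice("0123456789") for _ in range(len(pan) - 4))
        token = body + pan[-4:]
        if not luhn_valid(token):  # retry the ~1 in 10 candidates that pass Luhn
            return token


print(card_token("4111111111111111"))  # 16 random-looking digits ending in 1111
```

Keeping the last four digits is convenient for display, but it does mean the token is not entirely random; how many digits to preserve is a policy decision.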

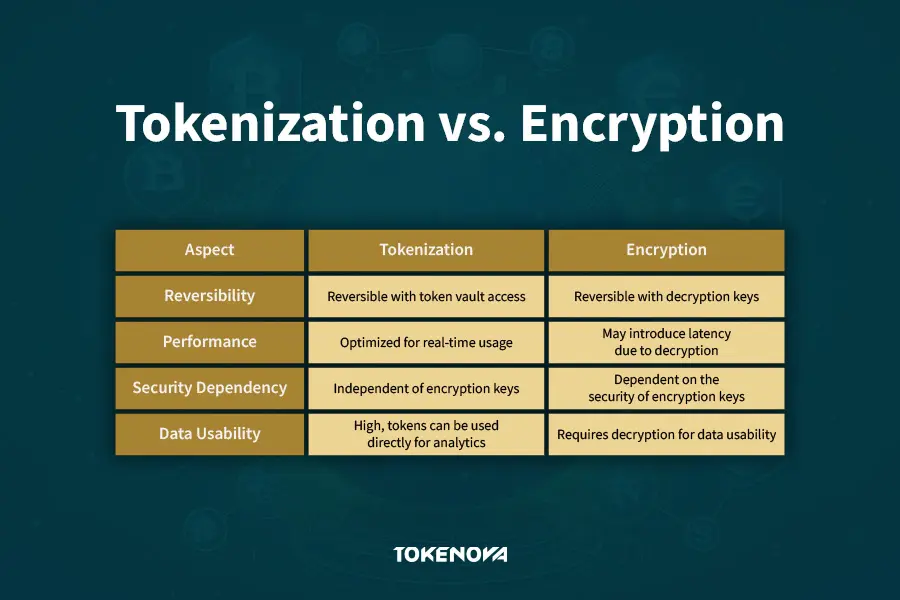

Tokenization vs. Other Data Protection Methods

When it comes to protecting sensitive data, there’s no one-size-fits-all solution. Different methods serve different needs, and understanding how tokenization compares to encryption and data masking can help you make the best choice for your organization.


Tokenization vs. Encryption

Think of encryption as putting your data in a locked box that only a specific key can open. While secure, the data needs to be unlocked (decrypted) every time it’s used, which can slow things down and leave it vulnerable during the process. Tokenization, on the other hand, replaces sensitive data with tokens that look similar but are completely meaningless without access to a secure system. This means you can use the tokens for analytics or operations without exposing the original data.

❔Why choose tokenization? If you need real-time data access, like analyzing customer behavior or processing payments, tokenization is faster and less risky.

❔Why choose encryption? Encryption is a great choice for securing data that doesn’t need to be accessed frequently, like archived records or files in storage.

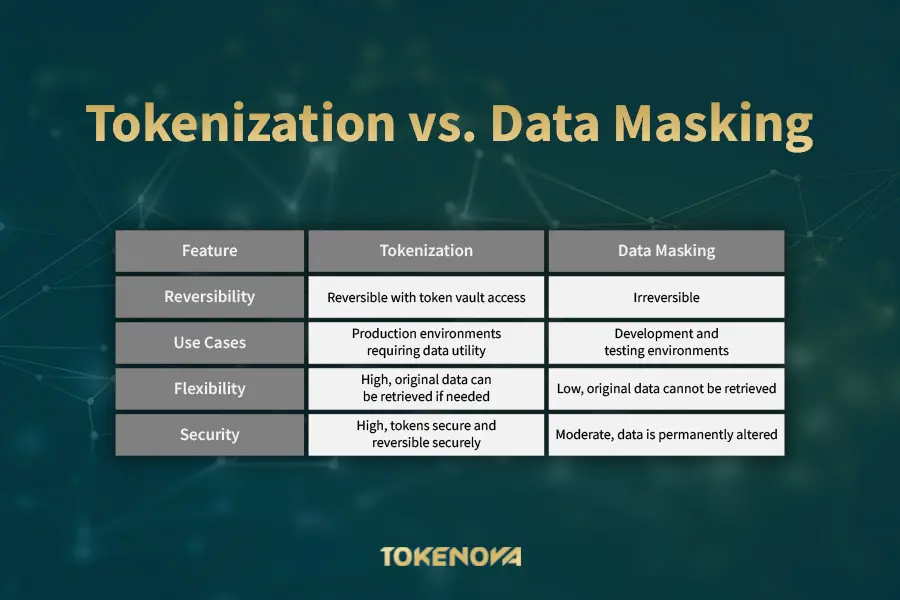

Tokenization vs. Data Masking

Data masking works differently: it permanently obscures or replaces sensitive data so the original can’t be read. It’s well suited to testing or training environments where real data isn’t needed. But if you ever need to retrieve the original information, masking won’t work. Tokenization, however, lets authorized systems reverse the process securely when necessary.

Why choose tokenization? It’s great for production systems where you need both security and usability, like customer analytics or fraud detection.

Why choose data masking? It’s ideal for scenarios where the original data isn’t needed, like testing software or training employees.
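The difference shows up clearly in code. In this minimal sketch (the function names and the dictionary standing in for a token vault are hypothetical), masking discards information for good, while tokenization keeps a protected mapping that an authorized system can use to recover the original.

```python
import secrets


def mask_card(pan: str) -> str:
    """Irreversible masking: keep the last four digits, hide the rest.
    Nothing is stored, so the original cannot be recovered from the output."""
    return "*" * (len(pan) - 4) + pan[-4:]


# Reversible tokenization for contrast: a dict stands in for a secured vault.
vault: dict[str, str] = {}


def tokenize(pan: str) -> str:
    """Issue a random token and remember which value it stands for."""
    token = "tok_" + secrets.token_hex(8)
    vault[token] = pan
    return token


pan = "4111111111111111"
print(mask_card(pan))       # ************1111
token = tokenize(pan)
print(vault[token] == pan)  # True: an authorized system can recover the original
```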

What’s Right for You?

The key is to match the method to your specific needs. Tokenization stands out for its balance between security and usability, making it a smart choice for businesses looking to protect sensitive data without sacrificing functionality.

🗃️Data Analyst Tip: If you’re working in real-time environments like payment processing or data analytics, tokenization is the way to go. On the other hand, if you’re setting up a test system or training team members, data masking can simplify the process while keeping things safe.

Applications of Tokenization Across Industries

Tokenization is a simple yet powerful tool that’s revolutionizing how industries handle sensitive information. By replacing sensitive data with secure tokens, it keeps information safe while still allowing businesses to operate smoothly. Whether it’s securing payments, protecting patient records, or ensuring supply chain transparency, tokenization is making the digital world safer and smarter.

The Backbone of Fintech

If you’ve ever paid with Apple Pay or transferred money via PayPal, you’ve seen tokenization in action. These platforms replace your card details with a unique token during transactions. This means your sensitive information is never exposed, even if the transaction is intercepted.

Tokenization also works alongside newer technologies. In cryptocurrency, the term usually means something different: representing assets as tokens on a blockchain rather than protecting existing data. Meanwhile, payment gateways like Stripe use tokenization to process millions of transactions every day without exposing sensitive data.

Securing Healthcare Data

Healthcare organizations face a tough challenge: keeping patient information private while still making it accessible to professionals and researchers. Tokenization solves this problem. Sensitive Protected Health Information (PHI) can be tokenized, allowing secure sharing for treatment or studies without compromising privacy. During the COVID-19 pandemic, tokenization enabled researchers to share de-identified patient data for vaccine and treatment research. Another example is Electronic Health Records (EHR) systems that use tokenization to ensure patient data is accessible to doctors but secure from breaches. Telemedicine platforms also rely on tokenization to safeguard virtual consultations, ensuring compliance with laws like HIPAA.

E-Commerce: Safer Shopping Experiences

Online shopping wouldn’t be what it is today without tokenization. Platforms like Amazon use it to replace your payment information with secure tokens, ensuring your card details remain protected.

Here’s how tokenization enhances e-commerce:

- It powers one-click payments, allowing you to save your card details securely for faster checkouts.

- It enables subscription services to manage recurring payments safely.

- It lets businesses analyze tokenized data to personalize your shopping experience without accessing sensitive information.

By improving security and streamlining checkout processes, tokenization boosts customer confidence and loyalty.

Streamlining Supply Chains

Supply chains are complex, and tokenization helps keep them secure and transparent. By assigning tokens to products, businesses can track and verify goods, ensuring they’re authentic and tamper-free.

Take De Beers, for example. The company uses tokenization in a blockchain system to trace diamonds from their source to the buyer. This ensures they’re ethically sourced and authentic, reducing counterfeiting and building consumer trust.

Tokenization in Other Industries

The benefits of tokenization extend far beyond payments, healthcare, and supply chains:

- Education: Schools use tokenization to protect student records while analyzing data to improve learning outcomes.

- Government: Citizen data is tokenized to ensure privacy and comply with national regulations.

- Telecommunications: Customer billing data is tokenized to safeguard sensitive information and meet industry standards.

- Logistics: Shipment details are tokenized, ensuring secure and accurate tracking.

Challenges and Limitations of Tokenization

Tokenization is a powerful way to protect sensitive data, but it’s not without its challenges. Managing scalability, maintaining speed, controlling costs, and keeping everything secure can feel overwhelming. The good news? These hurdles aren’t insurmountable. With a few smart strategies, you can make tokenization work seamlessly for your business.

Scaling Without the Stress

As your business grows, so does your data. Tokenization systems, especially vault-based ones, can struggle to keep up with increasing demands. Too many lookups or a sudden spike in traffic could cause bottlenecks, slowing everything down.

How to Scale Smartly

- Think Distributed: Spread the workload across multiple servers. A distributed system ensures no single server gets overwhelmed, keeping everything running smoothly.

- Leverage the Cloud: Managed, cloud-based tokenization services can scale with demand, so you’re not stuck trying to predict future capacity.

- Balance the Load: Load balancing is like a traffic cop for your token requests. It keeps things moving efficiently, even during your busiest times.

These approaches ensure your tokenization system grows with you, without the headaches.
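The distributed approach can be sketched as a vault split into shards, with each token routed to a shard by a stable hash. The `ShardedVault` class is hypothetical, and the in-process dictionaries stand in for separate vault servers.

```python
import hashlib


class ShardedVault:
    """Hypothetical sketch: token -> value mappings spread across N shards,
    each dict standing in for a separate vault server."""

    def __init__(self, num_shards: int) -> None:
        self.shards = [{} for _ in range(num_shards)]

    def _shard_for(self, token: str) -> dict:
        # Use a stable hash (not Python's per-process randomized hash()) so
        # every client routes the same token to the same shard.
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.shards)
        return self.shards[index]

    def put(self, token: str, value: str) -> None:
        self._shard_for(token)[token] = value

    def get(self, token: str) -> str:
        return self._shard_for(token)[token]


vault = ShardedVault(num_shards=4)
for i in range(1000):
    vault.put(f"tok_{i}", f"customer-{i}")
print(vault.get("tok_42"))                     # customer-42
print([len(shard) for shard in vault.shards])  # roughly 250 per shard
```

Plain modulo routing remaps most tokens whenever the number of shards changes; consistent hashing is the usual way to limit that reshuffling as a cluster grows.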

Keeping Speed Without Compromising Security

In fast-moving industries like e-commerce or finance, every millisecond matters. Tokenization systems can sometimes introduce delays, especially if they rely on vaults for every lookup. But there are ways to speed things up without sacrificing security.

How to Stay Fast

- In-Memory Vaults: Keeping token mappings in memory (instead of only in a traditional disk-based database) drastically cuts retrieval times.

- Cache What’s Common: Frequently used tokens? Keep them in a cache for instant access, like having your favorite snacks on the counter instead of in the pantry. Keep cache lifetimes short, since cached original values are themselves sensitive.

- Process Asynchronously: Handle token requests in the background so your system doesn’t have to wait around for each one to complete.

These tweaks ensure that even in high-pressure environments, your system delivers results quickly and securely.

Balancing Costs Without Sacrificing Results

Implementing tokenization can seem like a significant investment, especially for businesses that need to scale securely while maintaining high performance. The key is not to focus solely on costs but to take a strategic approach to achieve the desired results effectively and sustainably.

A Smarter Path to Success:

Tokenova offers tailored tokenization solutions that align with your business’s goals, providing the tools and expertise necessary to maximize both security and usability. By leveraging advanced technologies and best practices, Tokenova helps organizations implement systems that are not only robust but also efficient.

Here’s how Tokenova drives results:

☑️Custom Solutions: Tokenova adapts its systems to your unique needs, ensuring seamless integration with your existing infrastructure and operational processes.

☑️Expert Guidance: With a team of tokenization specialists, Tokenova ensures every step of your implementation is optimized for both security and performance.

☑️Scalable Infrastructure: Tokenova’s approach ensures your system is ready to grow with your business, eliminating bottlenecks as data demands increase.

By focusing on efficiency, scalability, and expertise, Tokenova empowers businesses to achieve their tokenization goals without compromise. It’s about building systems that protect sensitive data, enhance operational performance, and support long-term success.

Making Tokenization Work for You

Tokenization isn’t perfect, but the challenges are manageable with the right approach. By focusing on scalability, speed, cost, and security, you can build a system that not only protects sensitive data but also supports your business as it grows. The key is to think ahead, adopt smart strategies, and use tools that fit your specific needs.

🗃️Data Analyst Tip: At its core, tokenization is about balancing security with usability. When you get it right, it doesn’t just protect your data: it builds trust, streamlines operations, and sets your business up for long-term success.

Future Trends in Tokenization and Data Analytics

Tokenization is rapidly evolving alongside emerging technologies like blockchain, artificial intelligence (AI), and quantum computing. As data becomes more complex and essential, tokenization must adapt to protect sensitive information while enabling advanced analytics. These emerging trends are shaping the future of secure and efficient data usage.

Blockchain and Tokenization: A Seamless Integration

Tokenization is becoming a cornerstone of blockchain technology, particularly in decentralized finance (DeFi). In this context, the term means representing ownership of an asset as a digital token on a blockchain, a different use from the data tokenization described earlier. By converting real-world assets such as real estate or commodities into tokens, businesses can transfer ownership securely and transparently. This approach not only reduces reliance on intermediaries but also increases speed and efficiency.

For instance, Propy, a real estate platform, uses blockchain-based tokenization to streamline property transactions. Buyers and sellers can transfer ownership securely without traditional intermediaries, resulting in lower costs, faster transactions, and greater transparency. Additionally, smart contracts automate tokenized asset transfers, eliminating manual oversight and enhancing trust. Meanwhile, decentralized applications (dApps) offer secure, fraud-resistant management of tokenized assets.

AI and Machine Learning: A New Frontier for Tokenized Data

As AI and machine learning become integral to business operations, tokenization is emerging as a critical tool. Tokenized datasets allow companies to train AI models securely, ensuring sensitive data remains protected while enabling valuable insights.

Federated learning complements this approach: models are trained locally on each system’s (tokenized) data, and only model updates are shared, so private records never leave their source systems. Similarly, predictive analytics thrives on tokenized data, allowing businesses to identify patterns and trends without compromising security.

The benefits are clear:

- Privacy Preservation: Sensitive data remains protected, even in complex AI workflows.

- Enhanced Security: Tokenized data reduces the risk of breaches in AI-driven processes.

Quantum Computing and the Need for Quantum-Resistant Tokenization

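The emergence of quantum computing challenges traditional cryptography: a large-scale quantum computer could break today’s widely used public-key algorithms, such as RSA and elliptic-curve cryptography. Randomly generated vault tokens have no mathematical link to the original data, but the encryption that protects token vaults and the cryptography behind vaultless tokenization do depend on these algorithms, which is why quantum-resistant approaches are becoming essential for safeguarding tokenized data.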

Leading cybersecurity firms are developing innovative solutions, such as:

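- Lattice-based cryptography: Resistant to known quantum attacks and the basis of NIST’s first post-quantum standards, ML-KEM (FIPS 203) and ML-DSA (FIPS 204).

- Hash-based signatures: Signature schemes whose security rests only on hash functions, standardized by NIST as SLH-DSA (FIPS 205); they can authenticate data such as token-vault records against quantum-capable attackers.

- Multivariate quadratic equations: Schemes built on the hardness of solving systems of multivariate quadratic equations; this family is less mature (the Rainbow signature scheme was broken in 2022).

As these standards are adopted, businesses will have access to more secure frameworks to protect their sensitive data in a quantum-powered world.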
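To show how a hash-based signature works, here is a Lamport one-time signature, the simplest scheme in that family, built on SHA-256. This is a teaching sketch, not SLH-DSA: each key pair may sign only one message, the standardized schemes are far more elaborate, and the sample messages are hypothetical.

```python
import hashlib
import secrets


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen():
    """Private key: 256 pairs of random 32-byte secrets.
    Public key: the SHA-256 hash of each secret."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(_h(a), _h(b)) for a, b in sk]
    return sk, pk


def _bits(message: bytes) -> list[int]:
    """The 256 bits of SHA-256(message), most significant first."""
    n = int.from_bytes(_h(message), "big")
    return [(n >> (255 - i)) & 1 for i in range(256)]


def sign(sk, message: bytes) -> list[bytes]:
    """Reveal one secret from each pair, selected by the matching digest bit.
    A key pair must never sign a second message."""
    return [sk[i][bit] for i, bit in enumerate(_bits(message))]


def verify(pk, message: bytes, signature: list[bytes]) -> bool:
    """Hash each revealed secret and compare it with the public key."""
    if len(signature) != 256:
        return False
    return all(
        _h(secret) == pk[i][bit]
        for i, (secret, bit) in enumerate(zip(signature, _bits(message)))
    )


sk, pk = keygen()
sig = sign(sk, b"vault export #1")
print(verify(pk, b"vault export #1", sig))  # True
print(verify(pk, b"vault export #2", sig))  # False
```

Forging a signature would require inverting SHA-256, which quantum algorithms are not known to do efficiently; the trade-off is large keys and signatures and strict one-time use.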

Edge Tokenization: Security Where Data is Generated

The rise of edge computing calls for tokenization systems capable of securing data at its source. By tokenizing sensitive information locally, businesses can reduce the risks associated with transmitting data to centralized servers. This is particularly valuable for applications like the Internet of Things (IoT), autonomous vehicles, and smart cities.

An excellent example is Cisco, which uses edge tokenization in its IoT solutions. By processing data locally, Cisco ensures secure and reliable operations while reducing latency. In smart city applications, edge tokenization protects data used in traffic management and public safety, allowing for real-time decisions without compromising security.
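Tokenizing at the edge can be sketched as a step that runs on the device before anything is transmitted. The field names, sample reading, and `EdgeTokenizer` class are hypothetical; the point is that the token-to-value map stays on the device and only the tokenized payload travels upstream.

```python
import json
import secrets

# Hypothetical schema: which fields count as sensitive on this device.
SENSITIVE_FIELDS = {"driver_id", "plate_number"}


class EdgeTokenizer:
    """Runs on the edge device; the token -> value map never leaves it."""

    def __init__(self) -> None:
        self._local_vault: dict[str, str] = {}

    def tokenize_record(self, record: dict) -> dict:
        """Return a copy of `record` with sensitive fields replaced by tokens."""
        out = {}
        for key, value in record.items():
            if key in SENSITIVE_FIELDS:
                token = "tok_" + secrets.token_hex(8)
                self._local_vault[token] = value
                out[key] = token
            else:
                out[key] = value
        return out


edge = EdgeTokenizer()
reading = {"sensor": "cam-17", "speed_kmh": 62, "plate_number": "AB-123-CD", "driver_id": "D-9981"}
payload = json.dumps(edge.tokenize_record(reading))
print(payload)  # sensor id and speed intact; plate and driver id replaced by tokens
```

Central systems can still compute traffic statistics from `speed_kmh` and `sensor` in real time, while identifying details stay on the device.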

The Convergence of Tokenization with Emerging Technologies

The integration of tokenization with technologies like 5G and augmented reality (AR) is unlocking new possibilities:

- With 5G, edge tokenization enables secure, high-speed data processing for mobile networks, making real-time analytics faster and safer.

- In AR, sensitive user data is tokenized and processed locally, ensuring privacy while delivering seamless, immersive experiences.

Looking Ahead: The Future of Tokenization

Tokenization is no longer just about data protection; it’s driving innovation across industries. By adapting to technologies like blockchain, AI, and edge computing, tokenization is empowering businesses to use their data securely and effectively. Companies that embrace these advancements will not only safeguard their information but also gain a competitive edge in a rapidly evolving digital landscape.

The future of tokenization lies in its ability to merge security, scalability, and usability, a trifecta that will define the next era of data-driven success.

Transform Your Data Security with Tokenova

Tokenova is a leader in providing tokenization solutions across various industries, offering services tailored to meet the unique data protection needs of businesses in fintech, healthcare, and e-commerce. Tokenova’s services include both vault-based and vaultless tokenization systems, allowing organizations to choose the best solution based on their performance and security requirements.

At Tokenova, we do more than provide tokenization systems: we partner with you to create tailored solutions that protect your data and empower your business. Whether you’re navigating compliance with GDPR, HIPAA, or PCI DSS, or scaling operations with high-performance systems, Tokenova offers expert consulting to help you achieve your goals.

Our vault-based and vaultless tokenization options are designed to meet your unique needs, ensuring unmatched security, efficiency, and scalability. From seamless integration into your infrastructure to customized strategies that fit your vision, Tokenova ensures your business stays ahead in a rapidly evolving digital world.

Conclusion

In today’s data-driven world, tokenization in data analytics is essential for any organization handling sensitive data. It provides a powerful solution for securing information while enabling businesses to derive valuable insights from their data. Whether in fintech, healthcare, or e-commerce, tokenization allows businesses to comply with strict data protection regulations, reduce the risk of data breaches, and perform real-time analytics without exposing sensitive information.

As technology continues to evolve, tokenization will become even more integral to data security and processing. With emerging trends like blockchain, AI, and quantum computing, the future of tokenization is promising, offering businesses innovative ways to protect their data while staying competitive in an increasingly data-centric marketplace. Organizations that proactively adopt tokenization will not only safeguard their sensitive information but also unlock new opportunities for data-driven growth and innovation.

Key Takeaways

- Tokenization replaces sensitive data with tokens, ensuring data security without compromising its usability for analytics.

- It plays a critical role in industries like fintech, healthcare, and e-commerce, providing a secure way to handle PII, payment data, and PHI.

- Tokenization helps businesses comply with global data protection regulations such as GDPR, HIPAA, and PCI DSS.

- Enhanced data security, real-time analytics usability, and granular analytics capabilities make tokenization a versatile tool for modern data-driven businesses.

- Emerging technologies like blockchain and AI are driving the future of tokenization, offering new ways to secure data and streamline business operations.

- Tokenova provides robust tokenization solutions tailored to various industries, ensuring compliance, scalability, and optimized performance.

What’s the difference between deterministic and non-deterministic tokens?

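Deterministic tokenization always produces the same token for the same input value, so tokenized datasets can still be joined, grouped, and counted by that field. Non-deterministic (randomized) tokenization issues a new token each time a value is tokenized, which reveals less (identical values can’t be spotted by comparing tokens) but rules out joins and frequency analysis on the tokenized field. Choose deterministic tokens for fields you need to analyze, and non-deterministic tokens where linkability is the bigger risk.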
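A minimal sketch of both modes, assuming a simple in-memory vault (the `Tokenizer` class is hypothetical): in deterministic mode the vault remembers which token it issued for each value and reuses it.

```python
import secrets


class Tokenizer:
    """Hypothetical in-memory vault tokenizer supporting both token modes."""

    def __init__(self, deterministic: bool) -> None:
        self.deterministic = deterministic
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}  # used only in deterministic mode

    def tokenize(self, value: str) -> str:
        if self.deterministic and value in self._value_to_token:
            return self._value_to_token[value]  # reuse the token issued earlier
        token = "tok_" + secrets.token_hex(8)  # fresh random token
        self._token_to_value[token] = value
        if self.deterministic:
            self._value_to_token[value] = token
        return token


det = Tokenizer(deterministic=True)
rand = Tokenizer(deterministic=False)
print(det.tokenize("alice@example.com") == det.tokenize("alice@example.com"))    # True
print(rand.tokenize("alice@example.com") == rand.tokenize("alice@example.com"))  # False
```

Deterministic mode has a cost: the value-to-token index is itself sensitive and must be protected as carefully as the vault.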

Can tokenization be used in cloud environments?

Yes, cloud-based tokenization services are becoming more common. These services allow businesses to tokenize sensitive data before moving it to cloud storage, ensuring that the data remains secure even when stored off-premises. Leading cloud providers like AWS, Azure, and Google Cloud offer tokenization or related de-identification capabilities, either natively or through partner services.

How does tokenization impact database performance?

Vault-based tokenization can introduce some latency due to the need for frequent lookups in the token vault. However, vaultless tokenization systems eliminate this bottleneck by dynamically generating tokens without the need for a central vault, improving performance in high-speed environments. Additionally, optimized tokenization solutions like those offered by Tokenova can mitigate performance impacts through advanced caching and distributed architectures.
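One way to generate tokens without a vault is to derive them from the value with a keyed hash. The sketch below uses HMAC-SHA256 from Python’s standard library; the key and function name are hypothetical. This variant is one-way, so its tokens cannot be detokenized; vaultless products that support detokenization often rely on format-preserving encryption (for example, NIST’s FF1 mode) instead.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; in practice it would be fetched
# from a key-management service or HSM, never hard-coded.
SECRET_KEY = b"demo-key-do-not-use-in-production"


def vaultless_token(value: str, length: int = 16) -> str:
    """Derive a fixed-length numeric token from `value` with HMAC-SHA256.

    No vault lookup is needed and the same value always yields the same
    token, but the mapping is one-way: the token cannot be detokenized.
    """
    mac = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).digest()
    # Reduce the 256-bit MAC to `length` decimal digits (the small modulo
    # bias is acceptable for a sketch).
    return str(int.from_bytes(mac, "big") % 10**length).zfill(length)


print(vaultless_token("4111111111111111"))  # 16 digits, stable for a given key
```

Because tokens are computed rather than looked up, any node holding the key can tokenize independently, which removes the vault round-trip; the key then becomes the asset that must be protected and rotated.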

How easy is it to integrate tokenization with existing systems?

Integrating tokenization with existing systems can vary depending on the complexity of your IT infrastructure. Many tokenization solutions offer APIs and SDKs that facilitate seamless integration with popular platforms and databases. Companies like Tokenova provide comprehensive integration support, ensuring minimal disruption and quick deployment.

What are the cost implications of implementing tokenization?

The cost of implementing tokenization can vary based on factors such as data volume, chosen tokenization method (vault-based vs. vaultless), and specific business requirements. While initial setup costs can be significant, especially for smaller businesses, the long-term benefits of reduced fraud risk and compliance-related savings often outweigh the expenses. Additionally, managed tokenization services can offer scalable pricing models to align costs with usage.